We use traffic cameras owned by the City of Austin to study pedestrian road use and identify potential safety concerns. Our approach analyzes video from existing traffic cameras using a semi-automated processing pipeline powered by state-of-the-art computing hardware and algorithms.

Transportation agencies often own extensive networks of monocular traffic cameras, which are typically used for traffic monitoring by officials and experts. While the information captured by these cameras can also be of great value in transportation planning and operations, such applications are less common due to the lack of scalable methods and tools for data processing and analysis. This project exemplifies how the value of existing traffic camera networks can be augmented using the latest computing techniques.

Vision Zero is a holistic strategy to end traffic-related fatalities and serious injuries while increasing safe, healthy, and equitable mobility for all. Vision Zero holds that most traffic deaths and injuries are not the result of unavoidable accidents but a preventable public health issue. The strategy was first implemented in Sweden in the 1990s and has led to major reductions in traffic deaths and serious injuries in many places around the world that have taken concrete actions to achieve its goals. The Vision Zero team uses a data-driven approach to identify high-crash locations where engineering, education, or enforcement interventions should be prioritized for the greatest safety impact.

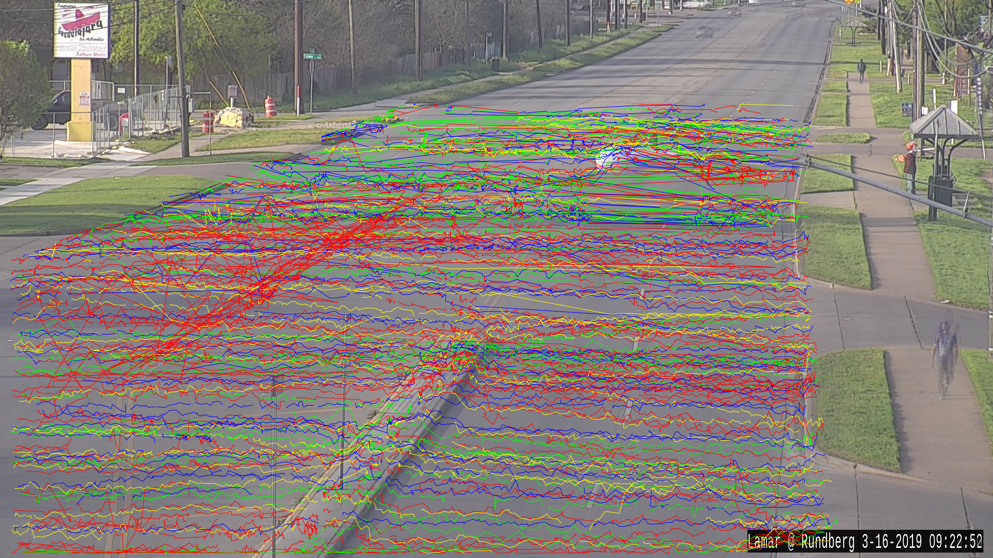

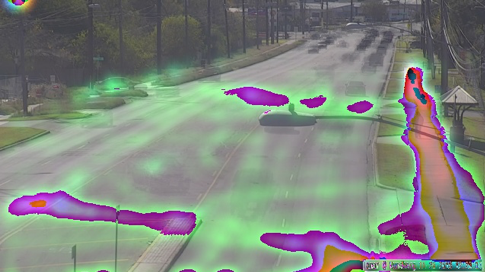

The City of Austin has over 400 CCTV cameras installed at intersections in the Austin area. These cameras are commonly used for manual traffic monitoring, with no long-term recording or archiving of videos. This website leverages the existing CCTV camera infrastructure to detect, track, and evaluate pedestrian crossings at prioritized locations identified by the Vision Zero team. This joint research effort with The University of Texas Center for Transportation Research (CTR) and the Texas Advanced Computing Center (TACC) analyzes video content from existing cameras using a semi-automated processing pipeline powered by state-of-the-art hardware and algorithms. The pipeline identifies pedestrian crossing events and summarizes them for each use case.

The video on the left shows an example of the object labeling and tracking algorithm applied to the intersection of Riverside Drive and Faro Drive. Pedestrians are identified and their crossings tracked, as shown by the pink bounding boxes in this video. Note that other objects are also identified and tracked (car, bus, truck, bicycle, motorcycle, and traffic light). The data for all objects is stored with no personally identifiable information, and it can be used for future research efforts that have yet to be identified.
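
To give a feel for how tracked bounding boxes can be turned into crossing events, here is a minimal Python sketch. It is not the project's actual pipeline. It assumes each tracked object is available as a list of per-frame boxes in image coordinates, that pedestrians carry the label `person`, and that the roadway is approximated by a single reference line between two hand-picked points. The `Track` class and every function name are hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Track:
    """Hypothetical container for one tracked object (an assumption, not the project's schema)."""
    track_id: int
    label: str        # e.g. "person", "car", "bus"
    boxes: List[Box]  # one box per frame, in time order


def foot_point(box: Box) -> Point:
    """Bottom-center of the box, a rough proxy for where the object touches the ground."""
    x_min, _, x_max, y_max = box
    return ((x_min + x_max) / 2.0, y_max)


def side_of_line(p: Point, a: Point, b: Point) -> float:
    """Signed value whose sign tells which side of the infinite line a-b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def count_crossings(track: Track, a: Point, b: Point) -> int:
    """Count how many times the track's foot point switches sides of the reference line."""
    crossings = 0
    previous_side = None
    for box in track.boxes:
        side = side_of_line(foot_point(box), a, b)
        if side == 0:
            continue  # exactly on the line: wait for the next frame to decide
        if previous_side is not None and (side > 0) != (previous_side > 0):
            crossings += 1
        previous_side = side
    return crossings


def summarize_pedestrian_crossings(tracks: List[Track], a: Point, b: Point) -> Dict[int, int]:
    """Map each pedestrian track id to its number of line crossings; other labels are ignored."""
    return {t.track_id: count_crossings(t, a, b) for t in tracks if t.label == "person"}


if __name__ == "__main__":
    line_start, line_end = (0.0, 100.0), (200.0, 100.0)  # hand-picked reference line
    walker = Track(1, "person", [(90, 40, 110, 80), (90, 60, 110, 100), (90, 80, 110, 120)])
    vehicle = Track(2, "car", [(10, 40, 60, 80), (10, 80, 60, 120)])
    print(summarize_pedestrian_crossings([walker, vehicle], line_start, line_end))
```

Because the reference line is treated as infinite, a real deployment would likely restrict the check to a region of interest around the roadway and filter out short or noisy tracks. The records hold only geometry and class labels, in line with storing no personally identifiable information.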